International Conference on Machine Learning (ICML), 2013

Topic Model Diagnostics: Assessing Domain Relevance via Topical Alignment

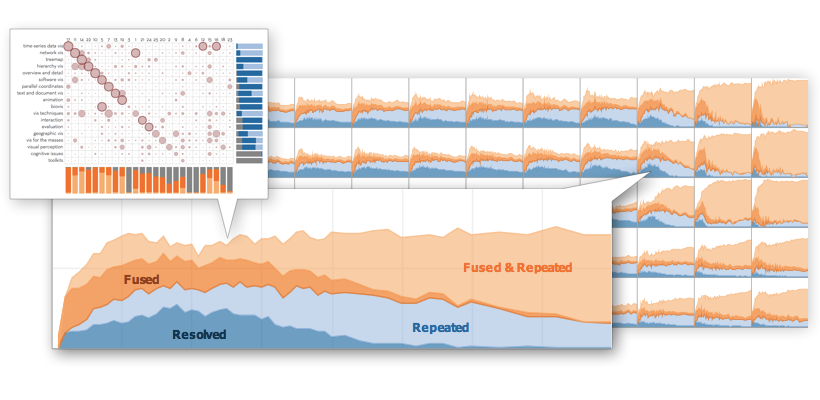

We present a framework to support large-scale assessment of topic models. On the top left, the correspondence chart visualizes the alignment between human-identified concepts and machine-generated latent topics. We then introduce a process to automate the calculation of topical alignment, so that analysts can compare any number of models to known domain concepts and examine the deviations.

Abstract

The use of topic models to analyze domain-specific texts often requires manual validation of the latent topics to ensure that they are meaningful. We introduce a framework to support large-scale assessment of topical relevance. We measure the correspondence between a set of latent topics and a set of reference concepts to quantify four types of topical misalignment: junk, fused, missing, and repeated topics. Our analysis compares 10,000 topic model variants to 200 expert-provided domain concepts, and demonstrates how our framework can inform choices of model parameters, inference algorithms, and intrinsic measures of topical quality.
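As a rough illustration of the four misalignment types, the sketch below counts them from a binary concept-by-topic match matrix. It is a simplification under stated assumptions, not the framework's actual computation: the function name `misalignment_counts`, the binary `match` matrix, and the reading of each type (a junk topic matches no concept, a fused topic matches two or more concepts, a missing concept matches no topic, a repeated concept matches two or more topics) are all assumptions made for illustration.

```python
from typing import Dict, List


def misalignment_counts(match: List[List[bool]]) -> Dict[str, int]:
    """Count misalignments in a binary correspondence matrix.

    match[i][j] is True when reference concept i is judged to match
    latent topic j (hypothetical input format, assumed for this sketch).
    """
    n_concepts = len(match)
    n_topics = len(match[0]) if match else 0

    # Column sums: how many concepts each topic matches.
    concepts_per_topic = [
        sum(match[i][j] for i in range(n_concepts)) for j in range(n_topics)
    ]
    # Row sums: how many topics each concept matches.
    topics_per_concept = [sum(row) for row in match]

    return {
        "junk": sum(1 for c in concepts_per_topic if c == 0),
        "fused": sum(1 for c in concepts_per_topic if c >= 2),
        "missing": sum(1 for t in topics_per_concept if t == 0),
        "repeated": sum(1 for t in topics_per_concept if t >= 2),
    }


# Toy example: 4 concepts (rows) by 4 topics (columns).
example = [
    [True, True, False, False],    # concept 0 is covered by topics 0 and 1
    [False, False, True, False],   # concept 1 is covered by topic 2
    [False, False, True, False],   # concept 2 is also covered by topic 2
    [False, False, False, False],  # concept 3 is covered by no topic
]
print(misalignment_counts(example))
```

In this toy matrix, topic 3 matches nothing (junk), topic 2 covers two concepts (fused), concept 3 is uncovered (missing), and concept 0 is covered twice (repeated), so each count is 1.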